“You just need to stay 20 minutes ahead of everyone else.” That’s what a fellow AI enthusiast said about teaching a topic you’ve only just learned.

In January, I watched a video by Riley Brown in which he offered an open invite to a content creation community he was starting. I had searched Google for just such a group only a few weeks earlier, so I jumped at the opportunity. Riley wanted to create a free community where people could work together and level up their AI content creation and automation skills, and I love free things.

I joined with an interest in everything image, video, and audio related, because DIY book marketing requires wearing all the hats. Once I was in the community, I also started paying attention to the automation workflows, because I like learning and I could see the benefit. However, as Riley began focusing more on automation, I wanted to return to experimenting with the creative tooling, because that’s where I got the most delight.

I also wanted to help build the community and normalize sharing less-than-perfect outputs. New AI technologies hit the consumer market so quickly that it’s almost impossible to stay on top of everything, especially if you have anything else going on in your life. You spend a couple of weeks learning a new technology, and then another tool comes out that makes two steps of what you’ve just mastered obsolete. We can learn on our own, but in this rapidly changing environment, I think it’s equally valuable to learn from one another and avoid common mistakes. That means sharing your process and admitting when you don’t know what you’re doing, which can be hard for some people, especially in front of a bunch of strangers on the internet.

Luckily, as a principal product designer, I don’t have a problem sharing my process. I’m used to starting with rough designs and working my way toward a polished solution through multiple iterations and lots of feedback. So I offered to run a series of events on image prompting and how one might integrate it into design workflows.

> Event Series Description: Starting with a logo for a fictional product, we’ll carry image generation from ideation to scalable vector, which we will then apply to AI-generated product imagery through photo editing. Along the way, we’ll establish a color palette, prompt for website copy, and generate secondary visuals that can all be tied together in a no-code website builder.

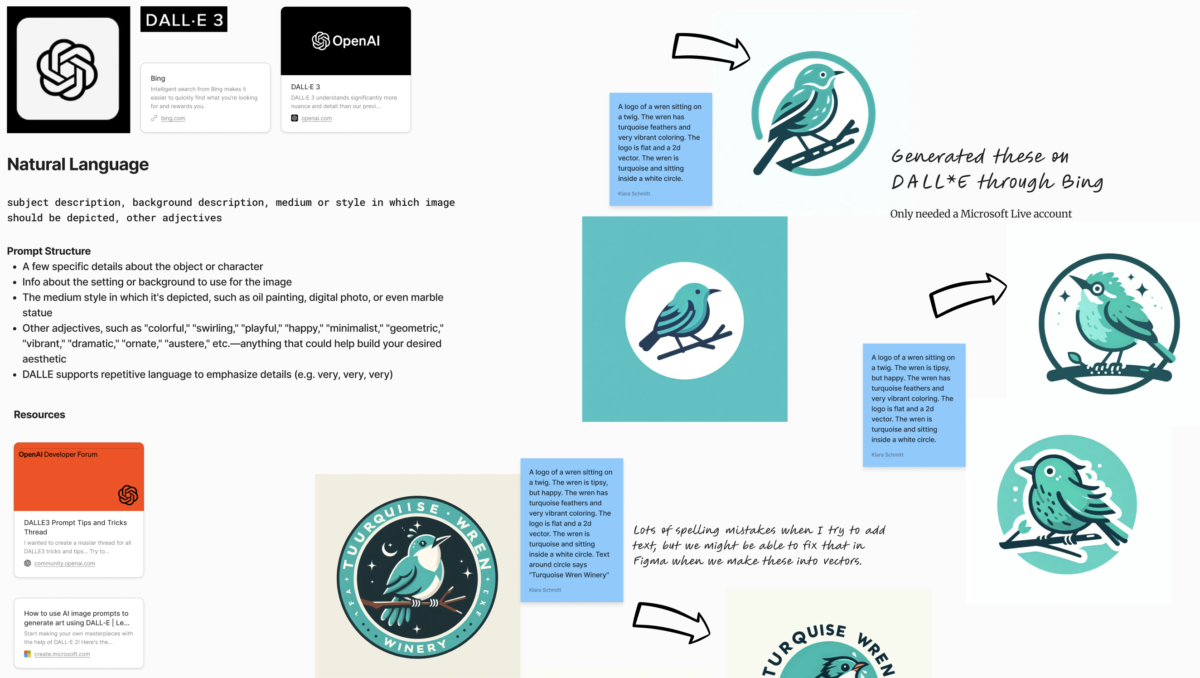

I thought I had a pretty good grasp of image prompting before I started the series, but I ended up learning so much more along the way. Up until my presentation, I had predominantly used Midjourney for personal projects. (Side note: This is all on me. My employer does not sanction Midjourney due to potential copyright violations in its training data.) But Midjourney costs money, and I certainly didn’t want to gatekeep AI image generation from other members of the community just because I could afford the paid subscription. This forced me to learn other engines (Stable Diffusion, DALLE 3) simply so I could teach other people. And by learning multiple systems, I began to understand their differences and when you might want to use one over another.

To my surprise, DALLE 3 performed really well on logos and even product imagery concepts. The only downsides were that there was no good way to remix the images, and Bing’s version of DALLE 3 doesn’t support inpainting. Additionally, halfway through my series, I learned that the terms of use for Bing-generated DALLE 3 images were a little murky when it came to commercial use, so I’m glad we were only practicing with fake branding. I don’t know whether the DALLE 3 license through OpenAI is different, given that it’s attached to a paid subscription.

View full board with notes on prompt structure and logo samples

Learning more about Stable Diffusion also made me realize it was actually really powerful; it just had a very steep learning curve, and people (like me) running it on a Mac were swimming against the current. Stable Diffusion runs much better on a PC with a dedicated graphics card and its own VRAM.

Additionally, because Stable Diffusion is open source, most of the free GUIs for interfacing with the engine provide very little instruction, so getting started feels completely overwhelming. (It’s like, “Hey look, here are 8 tabs, 10 accordions, and 30 checkboxes, all labeled with a bunch of words that don’t sound remotely familiar.”) Plus, if you try to watch video tutorials to get started, they are often skewed toward Windows or Linux environments. For those of us who started with Midjourney and might be on a Mac, this can feel quite jarring, since we’re used to plug-and-play interfaces that need very little configuration. But that doesn’t mean we should write it off.

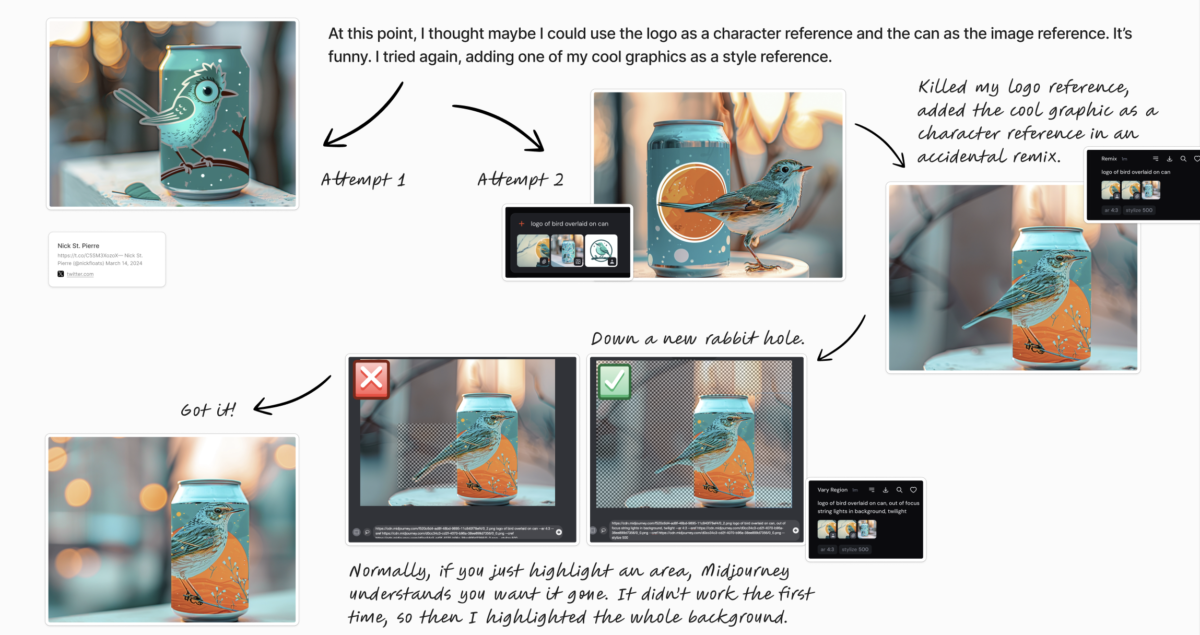

View full board with notes on trying to apply brand imagery to product concepts

Three times I mentioned to a Midjourney user that I was trying to learn Stable Diffusion, and three times I was told, “[Stable Diffusion] isn’t very good, it’s not worth the effort.” I think that’s only because they didn’t really understand how to use it. For me personally, it wasn’t until I found https://civitai.com/ and installed a Mac app called Draw Things, which blessedly had built-in helper text, that I began to understand the concepts of models and LoRAs and what the heck ControlNet was.

Does that mean I’ve nailed prompting in Stable Diffusion? Not yet. But this is partly because I have an Intel-based Mac, which is not recommended for anything involving Stable Diffusion. (Not that it stops me from trying.) Generating two images at 30 steps with Draw Things and an illustration model took me twenty minutes, and boy, were my fans whirring. Generating one image at 30 steps with the SDXL model brought that down to 3 minutes. But that’s still really slow compared to the 20 seconds it took a fellow community member who has a PC, and whom I’ve been peppering with questions as he explores the same technology. So now I find myself shopping around for a tower PC, even though it means I’ll have to learn the Windows command line next. But if there’s one lesson I took from hosting this event series, it’s that the more versatile you are in what you’re willing to learn and use, the more possibilities will open up to you in the future.